The Hindley-Milner Algorithm is an algorithm for determining the types of expressions. Basically it’s a formalisation of this earlier post. There’s an article on Wikipedia which is frustratingly terse and mathematical. This is my attempt to explain some of that article to myself, and to anyone else who may be interested.

Background

The Hindley-Milner algorithm is concerned with type checking the lambda calculus, not any arbitrary programming language. However most (all?) programming language constructs can be transformed into lambda calculus. For example the lambda calculus only allows variables as formal arguments to functions, but the declaration of a temp variable:

|

|

{ var x = 10; // code that uses x } |

can be replaced by an anonymous function call with argument:

|

|

{ fn(x) { // code that uses x }(10); } |

Similarily the lambda calculus only treats on functions of one argument, but a function of more than one argument can be curried, etc.

Expressions

We start by defining the expressions (e) we will be type-checking:

[bnf lhs=”e”]

[rhs val=”E” desc=”A primitive expression, i.e. 3.”/]

[rhs val=”s” desc=”A symbol.”/]

[rhs val=”λs.e” desc=”A function definition. s is the formal argument symbol and e is the function body (expression).”/]

[rhs val=”(e e)” desc=”The application of an expression to an expression (a function call).”/]

[/bnf]

Types

Next we define our types (τ):

[bnf lhs=”τ”]

[rhs val=”T” desc=”A primitive type, i.e. int.”/]

[rhs val=”τ0 → τ1” desc=”A function of one argument taking type τ0 and returning type τ1“/]

[/bnf]

Requirement

We need a function:

[logic_equation num=1]

[statement lhs=”f(ε, e)” rhs=”τ”/]

[/logic_equation]

where:

[logic_table]

[logic_table_row lhs=”ε” desc=”A type environment.”/]

[logic_table_row lhs=”e” desc=”An expression.”/]

[logic_table_row lhs=”τ” desc=”A type”/]

[/logic_table]

Assumptions

We assume we already have:

[logic_equation num=2]

[statement lhs=”f(ε, E)” rhs=”T” desc=”A set of mappings from primitive expressions to their primitive types (from 3 to int, for example.)”/]

[/logic_equation]

The following equations are logic equations. They are easy enough to read, Everything above the line are assumptions. The statement below the line should follow if the assumptions are true.

Our second assumption is:

[logic_equation num=3]

[assumption lhs=”(s, τ)” op=”∈” rhs=”ε” desc=”If (s, τ) is in ε (i.e. if ε has a mapping from s to τ)”/]

[conclusion lhs=”f(ε, s)” rhs=”τ” desc=”Then in the context of ε, s is a τ”/]

[/logic_equation]

Informally symbols are looked up in the type environment

.

Deductions

[logic_equation num=4]

[assumption lhs=”f(ε, g)” rhs=”τ1 → τ” desc=”If g is a function mapping a τ1 to a τ”/]

[assumption lhs=”f(ε, e)” rhs=”τ1” desc=”and e is a τ1“/]

[conclusion lhs=”f(ε, (g e))” rhs=”τ” desc=”Then the application of g to e is a τ”/]

[/logic_equation]

That is just common sense.

[logic_equation num=5]

[assumption lhs=”ε1” rhs=”ε ∪ (s, τ)” desc=”If ε1 is ε extended by (s, τ), e.g. if s is a τ”/]

[conclusion lhs=”f(ε, λs.e)” rhs=”τ → f(ε1, e)” desc=”Then the output type of a function with argument s of type τ, and body e, is the type of the body e in the context of ε1“/]

[/logic_equation]

This is just a bit tricky. We don’t necessarily know the value of τ when evaluating this expression, but that’s what logic variables are for.

Algorithm

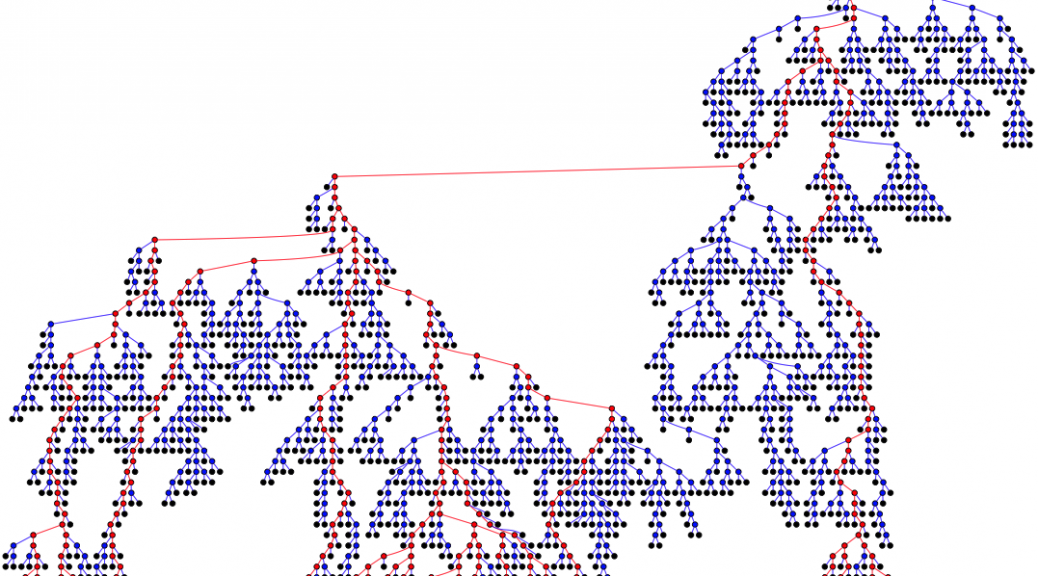

- We extend the set T of primitive types with an infinite set of type variables α1, α2 etc.

- We have a function

new which returns a fresh type variable each time it is called.

- We have a function

eq which unifies two equations.

We modify our function, part [4] (function application) as follows:

[logic_equation num=6]

[assumption lhs=”τ0” rhs=”f(ε, e0)” desc=”If τ0 is the type of e0“/]

[assumption lhs=”τ1” rhs=”f(ε, e1)” desc=”and τ1 is the type of e1“/]

[assumption lhs=”τ” rhs=”new” desc=”and τ is a fresh type variable”/]

[conclusion lhs=”f(ε, (e0 e1))” rhs=”eq(τ0, τ1 → τ); τ” desc=”Then after unifying τ0 with τ1 → τ, the type of (e0 e1) is τ.”/]

[/logic_equation]

That deserves a bit of discussion. We know e0 is a function, so it must have a type τa → τb for some types τa and τb. We calculate τ0 as the provisional type of e0 and τ1 as the type of e1, then create a new type variable τ to hold the type of (e0 e1).

Problem

Suppose e0 is the function length (the length of a list of some unspecified type τ2), then τ0 should come out as [τ2] → int (using [x] to mean list of x

.)

Suppose further that τ1 is char.

We therefore unify:

[logic_equation]

[statement lhs=”{{{τ2}}}” op=”→” rhs=”int“/]

[statement lhs=”{{{char}}}” op=”→” rhs=”τ”/]

[/logic_equation]

Which correctly infers that the type of (length [‘c’]) is int. Unfortunately, in doing so, we permanently unify τ2 with char, forcing length to have permanent type [char] → int so this algorithm does not cope with polymorphic functions such as length.