I’ve gone back to F♮, but decided to abandon the Perl implementation in favour of Java. I might be able to target the Java VM, but I’m not sure yet. In any case it’s a good chance to brush up my Java and learn IntelliJ IDEA (CE). I’m using SableCC to parse, and I’ve just got Log4j working. I’m long overdue writing some unit tests but for the moment, after a week or so, I have a working interpreter and the following sample script that runs as advertised:

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 |

// "env foo { ... }" is shorthand for // "def foo = env { ... };". // "env { ... }" extends the current environment, // evaluates the block in // the extended environment, then returns // the extended environment env math { // "fn bar (...) {...}" is shorthand for // "def bar = fn(...) {...};" fn square(x) { x * x } fn adder(x) { fn (y) { x + y } // demonstrates closure // and anonymous functions } env highermath { fn cube(x) { x * square(x) } // finds square in } // the enclosing environment } env badmath { fn square(x) { x + 1; } env highermath { fn square(x) { x * badmath.square(x) } // finds badmath in } // the root environment } def a = 10; // "." is the lookup operator: // lhs is an expression evaluating to an environment, // rhs is asymbol to look up. // "." binds more tightly than "(…)": print( math.square(a) ); // 100 // "if/else" is an expression returning a value, // not a statement // so there is no need for the // ternary "?:" operator. // here "if" returns a function to call: print( if (a == 5) { math.square } else { math.highermath.cube }(a) ); // 1000 // here "if" returns an environment in which // we look up a function: print( if (a == 5) { math } else { badmath.highermath }.square(a) ); // 110 // call a function to get a function // then call that: print(math.adder(5)(22)); // 27 // a string is a linked list of chars // chars are written 'x' // strings are written "abc" // "@" is cons (right associative) // "@@" is append (right associative) // linked lists can also be written between '[' and ']' print( 'a' @ 'b' @ "cd" @@ "ef" @@ ['g', 'h'] ); // "abcdefgh" |

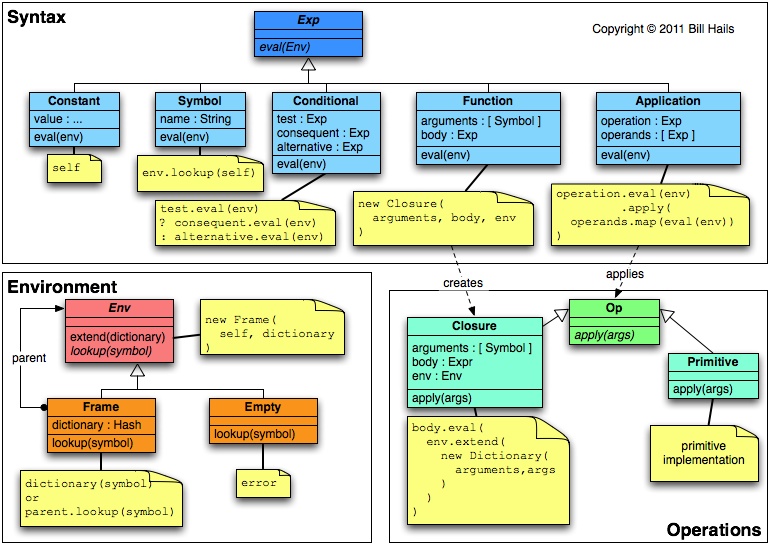

Apart from the env directive, and the fact that strings are lists of chars, this is still very much a scheme interpreter with a more syntax laden parser in front of it, but it’s early days. Next steps:

- Get unit tests in place. I’ve delayed too long.

- Implement an implicit strong type-checking visitor (I’m falling out of love with the Visitor pattern, but SableCC gives me no choice.)

- Replace the variable implementation with the same logic variables used by the type checker.

- Add algebraic data types a la ML. This should look like:

1typedef List(t) { Pair(t, List(t)) | Null }

(except thatListis already predefined.)tis a type variable, so this saysA

Listof some typetis either aPairof atand aListoft, or it isNull.PairandNullbecome type constructors for typeList(t), soPair('c', Null)creates a list of length 1 with typeList(char). Like I said, we already have lists, andh @ tis(cons h t), e.g.Pair(h, t). - Extend the function definition to allow pattern matching (actually unification). This would look like:

1234fn map {(f, []) { [] }(f, h @ t) { f(h) @ map(f, t) }}

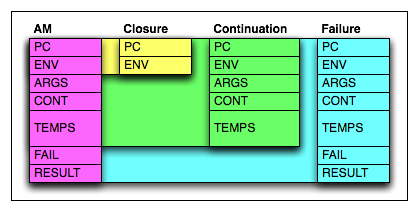

so the formal arguments to the function can optionally be moved inside the function body, and repeated with alternative formats, like a case statement. The format that unifies with the actual arguments has its body evaluated with the relevant variables bound. - Rewrite to CPS, add failure continuations, implement amb as a binary operator

:then

1def name = "Fred" then "Charlie"; - Implement

failas a keyword of no arguments:

1fn require(x) { if (x) { true } else { fail } }

(That last one might give the type checker a headache.)